We introduce a novel face liveness system, KBY-AI Face Liveness Detection SDK, that uses the front camera on commodity smartphones. Our system reconstructs 3D models of users’ faces to defend against 2D spoofing attacks in selfie-based authentication.

Facial authentication is becoming increasingly popular on smartphones due to its convenience and the improved accuracy of modern face recognition systems. However, most mainstream facial recognition technologies still rely on traditional 2D methods, making them vulnerable to spoofing attacks. Existing security solutions use specialized hardware, such as infrared dot projectors and dedicated cameras, but these methods do not align with the smartphone industry’s push for maximizing screen space.

This article introduces KBY-AI Face Liveness Detection, an innovative face anti-spoofing system designed for standard smartphones with a single front camera. Our solution leverages the smartphone screen to illuminate the user’s face from multiple angles, enabling photometric stereo-based 3D facial reconstruction. By analyzing depth-based face recognition features, our system effectively distinguishes real faces from 2D spoofing attempts.

To enhance security against replay attacks, we introduce a dynamic light passcode: a sequence of random light patterns displayed on the screen during authentication. We tested the system with 30 users across various lighting conditions and against multiple face spoofing techniques, including photo and video attacks. The results show that with just a 1-second light passcode, the system achieves an Equal Error Rate (EER) of 1.4% against photo attacks and 0.15% against video attacks.

Introduction of KBY-AI Face Liveness Detection

With the increasing reliance on smartphones in all aspects of daily life, secure user authentication is essential for protecting private information and enabling safe mobile payments. In recent years, face authentication has gained popularity as a promising alternative to traditional password-based security mechanisms. However, most existing face authentication systems rely on conventional 2D face recognition technologies, which are vulnerable to spoofing attacks using 2D photos, videos, or even 3D masks to bypass authentication.

Recently, some smartphone manufacturers have integrated face liveness detection into their high-end devices, such as the iPhone X/XR/XS and HUAWEI Mate 20 Pro. These phones feature specialized hardware components embedded in their screens to capture the 3D structure of the user’s face. For instance, Apple’s TrueDepth system employs an infrared dot projector along with a dedicated infrared camera, complementing the traditional front camera.

While effective, incorporating such specialized hardware often requires design compromises, such as adding a notch to the screen, which goes against the industry’s bezel-less trend. As consumers increasingly demand higher screen-to-body ratios, manufacturers are exploring alternative solutions. For example, Samsung introduced the Galaxy S10 as its first phone with face authentication and an Infinity-O hole-punch display. However, due to its lack of dedicated hardware for capturing facial depth, the S10 remained vulnerable to 2D photo and video spoofing attacks.

This raises a key question: How can we enable face liveness detection on smartphones using only a single front camera?

Previous research on face liveness detection against 2D spoofing attacks has primarily relied on computer vision techniques to analyze facial textural features, such as nose and mouth details and skin reflectance. However, these methods often require ideal lighting conditions, which are difficult to ensure in real-world scenarios. Another common approach is challenge-response authentication, where users must respond to random prompts, such as pronouncing a word, blinking, or performing facial gestures. However, these techniques are unreliable, as modern technologies, such as media-based facial forgery, can convincingly simulate facial movements.

A time-constrained protocol was recently introduced to mitigate these attacks, but it still required users to perform specific expressions. The additional time and effort involved, along with the need for user cooperation, make these protocols impractical in many situations, particularly for elderly users and in emergency cases.

In this article, we introduce a novel face liveness detection system, KBY-AI face liveness detection SDK, that uses the front camera on commodity smartphones. Our system reconstructs 3D models of users’ faces in order to defend against 2D-spoofing attacks.

KBY-AI face liveness detection SDK exploits smartphone screens as light sources to illuminate the human face from different angles. Our main idea is to display combinations of light patterns on the screen and simultaneously record the reflection of those patterns from the users’ faces via the front camera. We employ a variant of photometric stereo to reconstruct 3D facial structures from the recorded videos.

To this end, we recover four stereo images of the face from the recorded video via a least-squares method and use these images to build a normal map of the face. Finally, the 3D model of the face is reconstructed from the normal map using a quadratic normal integration approach.

From this reconstructed model, we analyze how depth changes across a human face compared to a model reconstructed from a photograph or video, and we train a deep neural network to detect various spoofing attacks.

Implementing our idea of reconstructing the 3D face structure for face liveness detection using a single camera involved a series of challenges.

First, displaying simple and easily forgeable light patterns on the screen makes the system susceptible to replay attacks. To defend against them, we designed the light passcode, a random combination of patterns in which the screen intensity changes during authentication, so that an attacker cannot correctly guess the passcode in advance. Second, in the presence of ambient lighting, the reflection of the light passcode is weak and therefore difficult to separate from the ambient light.

In order to make KBY-AI face liveness detection SDK practical in various realistic lighting conditions, we carefully designed light passcodes to be orthogonal and “zero-mean” to remove the impact of environment lighting.
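The following is a minimal sketch of why orthogonal, zero-mean patterns cancel constant ambient light. The article does not say how the patterns are generated, so the QR-based construction, the function name `make_passcode`, and the reflection gains are all assumptions made for illustration. On a real screen the patterns would also need a brightness offset, since pixel intensities cannot be negative.

```python
import numpy as np

def make_passcode(num_frames=30, seed=None):
    """Return a (4, num_frames) array of zero-mean, mutually orthonormal patterns.

    Hypothetical construction: draw random signals, subtract each signal's
    mean, then orthonormalize them with a QR decomposition.
    """
    rng = np.random.default_rng(seed)
    raw = rng.standard_normal((num_frames, 4))
    raw -= raw.mean(axis=0)        # every pattern now sums to zero
    q, _ = np.linalg.qr(raw)       # columns of q are orthonormal and stay zero-mean
    return q.T

patterns = make_passcode(num_frames=30, seed=0)

# Hypothetical per-quadrant reflection strengths plus constant ambient light.
gains = np.array([0.8, 0.5, 0.3, 0.6])
ambient = 5.0
observed = gains @ patterns + ambient

# Projecting onto each pattern cancels the ambient term and isolates each gain.
recovered = observed @ patterns.T
```

Because each pattern sums to zero, the constant ambient term contributes nothing to the projection, and orthogonality keeps the four quadrants from leaking into one another.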

In addition, we had to separate the impact of each pattern from the mixture of captured reflections to accurately recover the stereo images via the least-squares method. For this purpose, we linearized the camera responses by fixing camera exposure parameters and reversing gamma correction.
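Reversing gamma correction can be sketched as below. This assumes a simple power-law response with a gamma of 2.2, which is a common approximation and not a value taken from the article. Real cameras may follow the sRGB curve or a device-specific one, and `inverse_gamma` is a hypothetical helper name.

```python
import numpy as np

def inverse_gamma(frame_u8, gamma=2.2):
    """Map 8-bit gamma-encoded pixels to approximately linear intensities in [0, 1].

    Assumes a pure power-law encoding; gamma=2.2 is an illustrative default.
    """
    normalized = np.asarray(frame_u8, dtype=np.float64) / 255.0
    return normalized ** gamma

frame = np.array([[0, 128, 255]], dtype=np.uint8)
linear = inverse_gamma(frame)   # mid-grey 128 maps to roughly 0.22, not 0.5
```

Once pixel values are proportional to incoming light, the contributions of the four screen quadrants add linearly, which is what a least-squares separation relies on.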

Finally, the unknown direction of lighting used in the four patterns causes an uncertainty in the surface normals computed from the stereo images which could lead to inaccurate 3D reconstruction. We designed an algorithm to find this uncertainty using a general template for human surface normals. We used landmark-aware mesh warping to fit this general template to users’ face structures.

KBY-AI face liveness detection SDK is implemented as a prototype system on a Samsung S10 smartphone. We evaluated it with 30 volunteers under different lighting conditions, collecting 4900 videos at a resolution of 1280 × 960 and a frame rate of 30 fps. Against 2D printed photograph attacks, it achieves an EER of 1.4% in both dark and daylight settings.

It detects replay video attacks with an EER of 0.0% in the dark setting and 0.3% in the daylight setting. The contributions in this article are summarized as follows:

- We designed a face liveness detection system for standard smartphones using only a single front camera to reconstruct the 3D facial surface, without requiring additional hardware or user cooperation.

- We introduce the concept of light passcodes, which use randomly generated lighting patterns across four screen quadrants. Light passcodes enable 3D structure reconstruction from stereo images and, more importantly, enhance security against replay attacks.

- We implemented KBY-AI face liveness detection SDK as an Android application and evaluated its performance with 30 users across various scenarios. The results demonstrate its applicability and effectiveness in real-world conditions.

Background

In this section, we introduce photometric stereo and explain its role in 3D reconstruction under both known and unknown lighting conditions. Photometric stereo is a technique that recovers an object’s 3D surface using multiple images, where the object remains fixed while the lighting varies.

Computing Normals Under Known Lighting Conditions

Beyond the standard assumptions of photometric stereo (e.g., point light sources, uniform albedo), we assume that illumination is known. Given three point light sources, the surface normal vectors S can be determined by solving the following linear equation based on two known variables:

$$I = L^{T} S$$

where I = [I₁, I₂, I₃] represents the three stacked stereo images captured under different lighting conditions, and L = [L₁, L₂, L₃] denotes the corresponding lighting directions. To accurately compute the surface normals, at least three images with varying illumination are required, ensuring that the normals are properly constrained.
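As an illustration of the known-lighting case, the sketch below solves the standard Lambertian relation I = LᵀS for three pixels. It assumes ideal conditions (no shadows, no specular highlights). The function name, the light directions, and the synthetic normals are invented for the example. Albedo is recovered per pixel here, whereas the uniform-albedo assumption mentioned above would make it a single constant.

```python
import numpy as np

def normals_known_lighting(images, light_dirs):
    """Recover unit normals and albedo from three images under known lights.

    images:     (3, n) array, one row of pixel intensities per image.
    light_dirs: (3, 3) array whose columns are the light directions L1, L2, L3.
    """
    scaled = np.linalg.solve(light_dirs.T, images)   # solves L^T S = I for S
    albedo = np.linalg.norm(scaled, axis=0)          # length of each scaled normal
    normals = scaled / np.maximum(albedo, 1e-12)     # normalize, avoiding division by zero
    return normals, albedo

# Synthetic check with made-up lights and three pixels.
light_dirs = np.array([[0.0, 1.0, 0.0],
                       [0.0, 0.0, 1.0],
                       [1.0, 1.0, 1.0]])
light_dirs /= np.linalg.norm(light_dirs, axis=0)     # unit-length columns
true_normals = np.array([[0.0, 0.6, 0.0],
                         [0.0, 0.0, 0.8],
                         [1.0, 0.8, 0.6]])           # columns are unit normals
true_albedo = np.array([0.9, 0.5, 0.7])
images = light_dirs.T @ (true_normals * true_albedo)
normals, albedo = normals_known_lighting(images, light_dirs)
```

The system is solvable only when the three light directions are linearly independent, which is why at least three differently lit images are needed.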

Computing Normals Under Unknown Lighting Conditions

Now, we consider the scenario where lighting conditions are unknown. In this case, the matrix of intensity measurements is denoted as M, where M has a size of m × n, with m representing the number of images and n the number of pixels per image. Therefore:

$$M \approx L^{T} S$$

To solve this approximation, M is factorized using Singular Value Decomposition (SVD). Applying SVD, the following decomposition is obtained:

$$M = U \Sigma V^{T}$$

This decomposition can be used to recover L and S in the form $L^{T} = U\sqrt{\Sigma}A$ and $S = A^{-1}\sqrt{\Sigma}V^{T}$, where A is a 3 × 3 linear ambiguity matrix.
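A small sketch of this factorization, assuming NumPy and a rank-3 truncation of the SVD so that the ambiguity matrix A is 3 × 3. The function name and the synthetic data are illustrative only. The sketch shows that any invertible A yields an equally valid factorization, which is why extra information is needed to pin A down.

```python
import numpy as np

def factorize_unknown_lighting(M, rank=3):
    """Split M (m images x n pixels) into L^T (m x 3) and S (3 x n), taking A = identity."""
    U, sigma, Vt = np.linalg.svd(M, full_matrices=False)
    root = np.sqrt(sigma[:rank])
    Lt = U[:, :rank] * root          # U sqrt(Sigma)
    S = root[:, None] * Vt[:rank]    # sqrt(Sigma) V^T
    return Lt, S

rng = np.random.default_rng(0)
M = rng.random((4, 3)) @ rng.random((3, 50))    # synthetic rank-3 measurements
Lt, S = factorize_unknown_lighting(M)

# Any invertible 3 x 3 matrix A gives another valid pair (Lt A, A^-1 S).
A = rng.random((3, 3)) + 3.0 * np.eye(3)        # diagonally dominant, hence invertible
Lt_alt, S_alt = Lt @ A, np.linalg.inv(A) @ S
```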

KBY-AI Face Liveness Detection SDK Overview

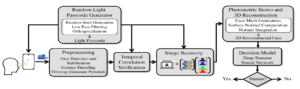

KBY-AI SDK is a face liveness detection system designed to protect against various spoofing attacks on face authentication systems. Figure 1 provides an overview of the KBY-AI face liveness detection SDK architecture. The process begins by dividing the phone screen into four quadrants, each serving as a light source. The Random Light Passcode Generator module selects a random light passcode, which consists of four orthogonal light patterns displayed across the four quadrants of the phone screen.

The front camera records a video clip capturing the reflection of these light patterns from the user’s face. These patterns serve a dual purpose: they enable reconstruction of the 3D structure of the face, and they help detect replay attacks. The recorded video is then processed through a preprocessing module, where the face region is first extracted and aligned in each consecutive video frame. Next, an inverse gamma calibration is applied to each frame to ensure a linear camera response.

Finally, the video is processed by constructing its Gaussian Pyramid, where each frame is smoothed and subsampled to remove noise. After preprocessing, a temporal correlation between the passcode in the video frames and the one generated by the Random Light Passcode Generator is assessed.

If a high correlation is verified, the filtered video frames, along with the random light passcode, are passed into the Image Recovery module. The objective of this module is to recover the four stereo images corresponding to the four light sources by leveraging the linearity of the camera response.
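One way to picture the image recovery step is as an ordinary least-squares problem. The sketch assumes each linearized frame is a weighted sum of four unknown stereo images plus a constant ambient image, with the weights given by the passcode. The article states only that linearity of the camera response is exploited, so this exact model and the name `recover_stereo_images` are assumptions.

```python
import numpy as np

def recover_stereo_images(frames, patterns):
    """Estimate four stereo images from a linearized video.

    frames:   (T, H, W) array of linearized video frames.
    patterns: (4, T) array, the intensity of each screen quadrant per frame.
    """
    num_frames, height, width = frames.shape
    # One column per quadrant plus a column of ones that absorbs ambient light.
    design = np.column_stack([patterns.T, np.ones(num_frames)])
    pixels = frames.reshape(num_frames, -1)
    coeffs, *_ = np.linalg.lstsq(design, pixels, rcond=None)
    return coeffs[:4].reshape(4, height, width)

# Synthetic check with made-up images and a random passcode.
rng = np.random.default_rng(1)
patterns = rng.standard_normal((4, 30))
true_images = rng.random((4, 2, 3))
ambient = rng.random((2, 3))
frames = np.einsum("kt,khw->thw", patterns, true_images) + ambient
recovered = recover_stereo_images(frames, patterns)
```

Every pixel shares the same design matrix, so a single `lstsq` call solves for all pixels at once.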

The recovered stereo images are then used to compute face surface normals under unknown lighting conditions using a variant of the photometric stereo technique. A generalized human normal map template, along with its 2D wireframe mesh connecting the facial landmarks, is employed to compute these normals accurately. Finally, a 3D face is reconstructed from the surface normals using a quadratic normal integration method.

Once the 3D structure is reconstructed, it is passed to the liveness detection decision model. In this model, a Siamese neural network is trained to extract depth features from both a known sample human face depth map and the reconstructed candidate 3D face. These feature vectors are then compared using L1 distance and a sigmoid activation function to generate a similarity score for the two feature vectors. The decision model classifies the 3D face as a real human face if the score exceeds a threshold, and detects a spoofing attack otherwise.

Face Liveness Detection

KBY-AI face liveness detection SDK is designed to protect against two major types of spoofing attacks: 2D printed photo attacks and video replay attacks.

2D Printed Photograph Attack:

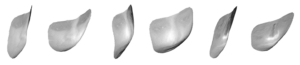

To protect against 2D printed photograph attacks, it is essential to determine whether the reconstructed 3D face belongs to a real, live person or a printed photograph. Figure 2 illustrates examples of 3D reconstructions from a printed photograph using the approach outlined in the previous section.

It is worth noting that the same general human face normal map template is used to compute the surface normals of a photograph. As a result, the overall structure of the reconstructed model resembles a human face. However, even with the use of this human normal map template, the freedom provided by solving for A is limited to just 9 dimensions.

Therefore, despite having a similar structure, the reconstruction from the 2D photograph lacks depth details in facial features, such as the nose, mouth, and eyes, as clearly shown in the examples in Figure 2.

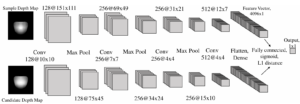

Based on these observations, we use a deep neural network to extract facial depth features from the 3D reconstruction and classify it as either a human face or a spoofing attempt. For this purpose, we train a Siamese neural network, adapted from previous work. The Siamese network consists of two parallel neural networks with identical architecture, but different inputs. One network receives a known depth map of a human face, while the other processes the candidate depth map obtained from the 3D reconstruction.

Therefore, the input to the Siamese network consists of a pair of depth maps. Each neural network in the Siamese architecture generates a feature vector for its respective input. These feature vectors are then compared using L1 distance, followed by a sigmoid activation function. The final output of the Siamese network is the probability that the candidate depth map corresponds to a real human face.
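The comparison stage can be sketched as follows. The feature extractor is omitted, and the feature vectors, weights, bias, and 0.5 threshold are all made up for illustration. In a trained network the weights would be learned. Weighting the element-wise L1 distance before the sigmoid is a common Siamese design, assumed here rather than taken from the article.

```python
import numpy as np

def similarity_score(feat_ref, feat_cand, weights, bias):
    """Sigmoid of a weighted element-wise L1 distance between two feature vectors."""
    l1 = np.abs(feat_ref - feat_cand)
    logit = float(weights @ l1 + bias)
    return 1.0 / (1.0 + np.exp(-logit))

def is_live(score, threshold=0.5):
    """Accept the candidate as a real face when the score exceeds the threshold."""
    return score > threshold

# Hypothetical features: negative weights make larger distances lower the score.
weights = -np.ones(4)
bias = 2.0
reference = np.array([0.2, 0.9, 0.4, 0.7])
close_candidate = reference + 0.05        # depth features similar to a real face
flat_candidate = np.zeros(4)              # depth features of a flat surface

score_close = similarity_score(reference, close_candidate, weights, bias)
score_flat = similarity_score(reference, flat_candidate, weights, bias)
```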

Video Replay Attacks

KBY-AI face liveness detection SDK employs a two-fold approach to defend against video replay attacks. The first line of defense leverages the randomness of the passcode. When a human subject attempts to authenticate via the KBY-AI SDK, the passcode displayed on the screen is reflected by the face and captured by the camera.

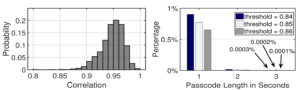

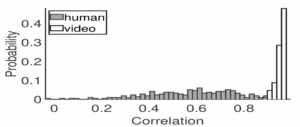

As a result, the average brightness of the video frames over time shows a strong correlation with the light incident on the face, which corresponds to the sum of the four patterns in the passcode displayed on the screen. Figure 4 (left) illustrates the distribution of the correlation between the recorded passcodes and the original passcode in experiments conducted with humans.

In Figure 4, the left panel displays the distribution of the correlation between passcodes recorded from the human face and the original passcode. The right panel shows the percentage of passcodes whose correlation with a randomly selected passcode exceeds a threshold, for various threshold values.

For genuine users, the correlation between the recorded and original passcodes is greater than 0.85 in over 99.9% of the cases. An adversary may attempt to spoof our system by recording a video of a genuine user while using the KBY-AI SDK and later replaying this video on a laptop screen or monitor in front of the phone.

In this case, the video frames captured by the camera will contain reflections of the passcode from the phone screen, as well as the passcode present in the replayed video. Because the replayed passcode generally differs from the newly generated one, this mixture tends to correlate poorly with the expected passcode, and the attempt is rejected.
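The correlation check described above can be sketched as a Pearson correlation between the mean brightness of each frame and the sum of the four patterns. The synthetic frames, the helper names, and the simple brightness model are assumptions made for the example. The replay case here ignores the extra reflection from the phone screen and simply uses a video that carries a different passcode.

```python
import numpy as np

def passcode_correlation(frames, patterns):
    """Pearson correlation between per-frame mean brightness and the summed passcode."""
    brightness = frames.reshape(frames.shape[0], -1).mean(axis=1)
    expected = patterns.sum(axis=0)
    return float(np.corrcoef(brightness, expected)[0, 1])

def synth_frames(p):
    """Hypothetical 4x4 frames whose brightness follows the summed patterns in p."""
    level = 10.0 + 0.3 * p.sum(axis=0)
    return level[:, None, None] * np.ones((p.shape[1], 4, 4))

rng = np.random.default_rng(2)
patterns = rng.standard_normal((4, 30))         # passcode shown during this session
other_patterns = rng.standard_normal((4, 30))   # passcode baked into a replayed video

genuine = passcode_correlation(synth_frames(patterns), patterns)
replayed = passcode_correlation(synth_frames(other_patterns), patterns)
```

A session would pass this first check only if its correlation clears a threshold such as the 0.85 and 0.9 values discussed in the text.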

For the rare cases in which the correlation of an attack video exceeds the pre-defined threshold, our second line of defense is activated. Similar to 2D photograph attacks, video replay attacks can also be detected using the reconstructed 3D model. The reconstruction from the replayed video faces two main issues.

First, it is challenging for the adversary to accurately synchronize the playback of the attack video with the start of the passcode display on the smartphone. Second, even if the correlation passes the threshold, there will still be discrepancies between the replayed passcode and the passcode generated by the KBY-AI face liveness detection SDK.

As a result, the DTW (Dynamic Time Warping) matching will not align the recorded video frames with the displayed passcode effectively. Consequently, the surface normals and 3D reconstruction derived from these mismatched stereo images fail to capture the correct 3D features of the face, making it possible to identify the spoofing attempt.
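For readers unfamiliar with DTW, here is a textbook dynamic-programming version applied to two 1D signals. The article does not detail how DTW is applied inside the SDK, so this is a generic sketch and not its implementation. The function name and the sample sequences are illustrative.

```python
import numpy as np

def dtw_distance(a, b):
    """Classic dynamic time warping cost between two 1D sequences."""
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: insertion, deletion, or match.
            cost[i, j] = step + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    return float(cost[n, m])

# A time-stretched copy aligns at zero cost; an unrelated signal does not.
stretched = dtw_distance([1, 2, 3], [1, 2, 2, 3])
mismatched = dtw_distance([0, 0, 0], [1, 1, 1])
```

A low alignment cost means the recorded brightness follows the displayed passcode up to small timing shifts. A passcode from a replayed video leaves a large residual cost.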

Evaluation

To evaluate the performance of KBY-AI face liveness detection SDK, we address the following questions:

What is the overall performance of KBY-AI face liveness detection SDK?

To assess the overall performance of our system, we evaluated its ability to defend against 2D printed photograph attacks and video replay attacks. We report the system’s accuracy in terms of the true and false accept rates under two different lighting conditions. Additionally, we calculate the equal error rate (EER) for these attacks.
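For reference, the EER is the operating point at which the false accept rate equals the false reject rate. The sketch below estimates it by sweeping thresholds over similarity scores. The scores are invented for illustration, and the function is a simple approximation that picks the threshold where the two rates are closest.

```python
import numpy as np

def equal_error_rate(genuine_scores, attack_scores):
    """Return (eer, threshold) at the threshold where FAR and FRR are closest."""
    genuine = np.asarray(genuine_scores, dtype=float)
    attack = np.asarray(attack_scores, dtype=float)
    best_gap, best_eer, best_threshold = np.inf, None, None
    for t in np.unique(np.concatenate([genuine, attack])):
        far = np.mean(attack > t)      # attacks wrongly accepted
        frr = np.mean(genuine <= t)    # genuine users wrongly rejected
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, best_eer, best_threshold = gap, (far + frr) / 2.0, t
    return best_eer, best_threshold

# Made-up scores: one attack (0.65) scores higher than one genuine user (0.6).
eer, threshold = equal_error_rate([0.9, 0.8, 0.7, 0.6], [0.1, 0.2, 0.3, 0.65])
```

With these sample scores, one of four attacks is accepted and one of four genuine users is rejected at the chosen threshold, giving an EER of 25%.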

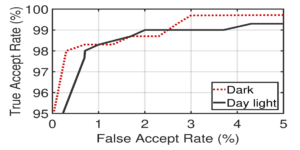

Figure 5. ROC curve for detecting photo attacks under dark and daylight conditions with a 1-second passcode. The detection rate is 99.7% in the dark setting and 99.3% in the daylight setting at true accept rates of 98% and 97.7%, respectively.

First, we evaluate our system’s performance against printed photograph attacks. Figure 5 presents the ROC curve for KBY-AI SDK’s defense against photo attacks under dark and daylight conditions, with a passcode duration of 1 second.

In the dark setting, the system achieves a false accept rate of only 0.33% when the true accept rate is 98%, meaning that photo attacks are detected with an accuracy of 99.7%, while real users are rejected in 2% of cases. The equal error rate (EER) for the dark setting is 1.4%.

In daylight conditions, the system detects photo attacks with an accuracy of 99.3% when the true accept rate is 97.7%, with an EER of 1.4% as well.

KBY-AI face liveness detection SDK performs better in dark settings because the impact of the light passcode is more pronounced when ambient lighting is weaker. As a result, the signal-to-noise ratio in the recorded reflections from the face is higher, leading to improved 3D reconstruction accuracy.

Figure 6. Distribution of the correlation between the passcode on the phone and the camera response for real users and video attacks, combined across dark and daylight settings.

We also evaluated our system against video replay attacks by using videos collected from the volunteers during the experiments. Each video was played on a Lenovo ThinkPad laptop with a screen resolution of 1920 × 1080, positioned in front of a Samsung S10 running the KBY-AI SDK. Our system successfully detected these video replay attacks, achieving an EER of 0% in dark settings and 0.3% in daylight settings.

Figure 6 presents a histogram of the correlation between the passcode displayed on the phone and the camera response across all experiments using a 1-second passcode. The correlation for all attack videos remains below 0.9, whereas 99.8% of videos from real human users exhibit a correlation above 0.9.

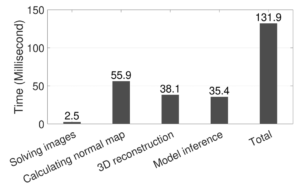

Figure 7. Processing time of the different modules of the system for a passcode of 1s duration.

Another key performance metric is the total time required for liveness detection using the KBY-AI Face Liveness Detection SDK. Figure 7 illustrates the processing time of different system modules.

Beyond the signal duration of the passcode, the liveness detection process takes only 0.13 seconds in total. The stereo image recovery is highly efficient, requiring just 3.6 milliseconds. The most computationally expensive step is normal map computation, which takes 56 milliseconds, as it involves two 2D warping transformations. The 3D reconstruction process requires 38.1 milliseconds, while feature extraction and comparison via the Siamese network take 35.4 milliseconds.

How well does KBY-AI face liveness detection SDK perform in indoor lighting?

To evaluate the effect of indoor lighting, we conducted experiments with 10 volunteers in a room with multiple lights on. The objective was to determine whether additional artificial lighting affected the efficacy of our light passcode mechanism.

In these experiments, we used 1-second passcodes. At a true accept rate of 98%, KBY-AI Face Liveness Detection SDK achieved an accuracy of 99.7% against 2D attacks, with an equal error rate (EER) of 1.4%, which is comparable to the dark setting.

These results indicate that the SDK maintains its effectiveness even in the presence of artificial indoor lighting.

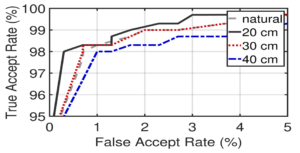

Figure 8. ROC curve for different face to smartphone screen distances with a passcode duration of 1s.

What is the impact of the smartphone’s distance from the face on KBY-AI face liveness detection SDK’s performance?

We evaluated the effect of the distance between the face and the smartphone screen by conducting experiments with 10 volunteers.

First, we asked the volunteers to hold the smartphone naturally in front of their face, ensuring their face was within the camera view, and to use our system. We measured the distance in this scenario for each volunteer and found that the average distance between the face and the screen during these experiments was 27 cm.

Subsequently, we instructed the volunteers to use the KBY-AI Face Liveness Detection SDK while holding the smartphone at various distances from their face, specifically at 20 cm, 30 cm, and 40 cm.

Figure 8 shows the ROC curve for the KBY-AI Face Liveness Detection SDK’s performance against 2D attacks at various distances between the face and the smartphone screen. At both the natural distance and 30 cm, the KBY-AI face liveness detection system detects the 2D attack with an accuracy of 99.3% when the true accept rate is 98%.

The detection accuracy improves to 99.7% with a true accept rate of 98% when the distance between the face and the screen is reduced to 20 cm. Additionally, we observe that KBY-AI SDK’s detection accuracy remains consistent at 99.3% even when the smartphone’s distance from the face is increased to 40 cm.

This demonstrates that the KBY-AI Face Liveness Detection SDK can effectively defend against spoofing attempts even at relatively larger distances. While the true accept rate slightly decreases to 96.7% at 40 cm, this minor reduction does not affect usability—since users typically hold their phones closer—nor does it compromise security, as the detection accuracy remains high.

Does the orientation of the face affect KBY-AI face liveness detection SDK’s performance?

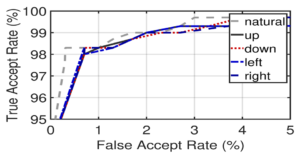

To assess the impact of face orientation on KBY-AI face liveness detection’s performance, we first asked volunteers to hold their phones naturally, keeping their faces vertically aligned with the smartphone screen while using our system. Next, we instructed them to rotate their heads up, down, left, and right, conducting trials for each face orientation.

Figure 9 presents the performance of KBY-AI face liveness detection SDK across different face orientations. In the natural face position, the system achieves a detection accuracy of 99.7% with a true accept rate of 98.3%. This demonstrates the robustness of the SDK in authenticating real users while effectively preventing 2D spoofing attempts.

KBY-AI face liveness detection SDK detects 2D attacks with an accuracy of 99.3% at true accept rates of 98%, 98.3%, 98%, and 98.3% for the up, down, left, and right face orientations, respectively.

The Equal Error Rate (EER) for natural face orientation and all four rotated face poses (up, down, left, right) is 1.44%. This indicates that KBY-AI face liveness detection SDK maintains consistent and robust performance across different face orientations while effectively detecting 2D spoofing attacks.

Frequently Asked Questions

What is face liveness detection?

Face liveness detection is a technology used to determine whether a face presented to a system is real (from a live person) or fake (such as a photo, video, or mask). It is commonly used in biometric authentication systems to prevent spoofing attacks.

What kind of face liveness detection solutions does KBY-AI provide?

KBY-AI provides passive face liveness detection, active face liveness detection, and 3D depth-based liveness detection.

Do KBY-AI SDKs support cross-compilation for multiple platforms?

Yes, every SDK includes a mobile version (Android, iOS, Flutter, React-Native, Ionic Cordova), a C# version, and a server version.

How can I know the price detail for KBY-AI SDKs?

You can contact KBY-AI by email, WhatsApp, Telegram, Discord, and other channels through the Contact Us page below.

Is the image or data stored?

No, KBY-AI face liveness detection SDK works fully offline as an on-premises solution.

Conclusion

This article proposes a secure liveness detection system, KBY-AI face liveness detection SDK, that uses a single smartphone camera with no extra hardware. KBY-AI face liveness detection SDK uses the smartphone screen to illuminate the human face from various directions via a random light passcode.

The reflections of these light patterns from the face are recorded to construct the 3D surface of the face. This surface is used to determine whether the authentication subject is a live human. KBY-AI face liveness detection SDK achieves a mean EER of 1.4% and 0.15% against photo and video replay attacks, respectively.