Palmprint recognition is a biometric authentication method that uses the unique patterns, textures, and structures found on the human palm to identify individuals. It has gained popularity due to its high accuracy, robustness, and ease of use in various security and identification systems.

Palmprint recognition is a powerful tool in the field of biometrics, offering a blend of accuracy and convenience. With advancements in technology, it is poised to play a significant role in security and identification systems worldwide.

Advantages of Palmprint Recognition Technology

As one of the newly emerging biometric recognition technologies, palmprint recognition can extract stable, noise-resistant and recognizable features from low-resolution images. Compared with other biometric recognition technologies, palmprint recognition technology has the following advantages:

- Compared with fingerprints, palmprints offer a larger recognition area, contain richer information, are less susceptible to damage, and remain stable over the long term. Palmprint recognition does not require particularly high image resolution, so the acquisition equipment costs much less than fingerprint equipment.

- Compared with faces, palmprints are not affected by factors such as glasses, expressions, and makeup, and are therefore more stable. In terms of user acceptance, palmprint collection is also more user-friendly.

- Although palmprint recognition is less accurate than iris or DNA recognition, palmprint acquisition equipment costs much less than the equipment those two technologies require.

- Compared with behavioral characteristics such as signatures and gait, palmprint recognition is not affected by people’s habits, the characteristics will not change over time, and the recognition accuracy is much higher than behavioral characteristics.

In summary, palmprint recognition has the advantages of high recognition accuracy, low acquisition equipment cost, high stability, and high user acceptance, and its application in life is becoming more and more extensive.

Basic Steps Of Palmprint Recognition

The palmprint recognition process is divided into two stages: feature database creation and feature database retrieval.

- Database creation collects the registered samples, performs preprocessing and feature extraction on them, and stores the resulting features in a palmprint database.

- Database retrieval collects the sample to be tested and, after the same preprocessing and feature extraction, matches its features against the data in the palmprint database to obtain the category of the sample.

Palmprint Feature Extraction Method

Palmprint feature extraction methods are mainly divided into four categories, namely structure-based methods, statistics-based methods, subspace-based methods and coding-based methods.

The structure-based method mainly uses the direction and position information of the main lines and wrinkles in the palmprint to realize palmprint recognition. This method is the most intuitive. However, no matter which edge detection operator is used, it is impossible to extract all the lines, so this method is very poor in practicality and has been gradually abandoned.

The statistics-based method mainly uses statistical features, such as the mean and variance, to form a set of feature vectors that describe the palmprint image. It can be divided into local and global statistical methods according to whether the image is divided into blocks. The local statistical method divides the image into several small blocks, computes the statistics of each block, and then combines them into the statistical feature vector of the entire palmprint. For example, the Fourier transform or the wavelet transform can be used to obtain the statistics of each block of the palmprint image for recognition.

The subspace-based method regards the original palmprint image as an ordinary picture pattern, and transforms the high-dimensional matrix corresponding to the picture into a low-dimensional vector or matrix through projection mapping operation. According to the implementation method of projection transformation, it is divided into linear subspace method and nonlinear subspace method. Commonly used subspace feature extraction methods include principal component analysis (PCA), FisherPalm method, BDPCA (Bi-directional PCA), etc.

The coding-based method regards the palmprint image as a texture image and encodes it according to certain rules. Zhang et al. proposed a coding method called PalmCode, which first filters the image with a 2D Gabor filter and then encodes it according to the signs of the real and imaginary parts of the filtering result. Kong et al. proposed filtering the palmprint image with Gabor filters in six directions and encoding the direction with the smallest amplitude, which is called competitive coding. Because competitive coding captures the directional information of the palmprint image and is insensitive to illumination, its recognition accuracy is very high.

EDCC Approach

Palmprints are full of line and texture features and have rich directional information. Therefore, directional coding is considered to be the most effective method for extracting palmprint features. Competitive coding is one of the highly recognizable coding methods. It uses filters in different directions to convolve with the palmprint image, and then encodes the palmprint image according to certain coding rules.

The EDCC algorithm has the following key points:

- The original palmprint image is processed by the image enhancement operator to make the lines more prominent and the extracted direction more accurate.

- The image is filtered using a set of 2D Gabor wavelet filters with different orientations.

- The directions with the largest and second largest filter response values are selected as the main and secondary directions of the ridge line where the point is located, and then encoded.

These three key points are explained in detail below.

Image Enhancement

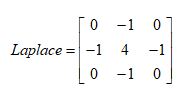

The Laplace operator is a commonly used method for image sharpening. Sharpening can enhance the contrast of the image, highlight the parts with obvious changes in grayscale values, and make relatively blurred lines clear.

A typical Laplacian with kernel size:

Use the operator in the above formula to convolve the following palmprint image.

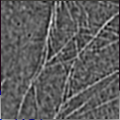

The result after enhancing the palm print is shown in the figure below.

The lines are clearly enhanced and the grayscale value at the lines is much higher than that of the surrounding skin.

Experiments show that the Laplacian enhancement operator effectively increases the contrast of the lines. Therefore, the EDCC algorithm applies the Laplacian operator to the image before filtering it with 2D Gabor wavelets.
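As a minimal sketch of this enhancement step, the snippet below convolves a small grayscale patch with a 3×3 Laplacian kernel. The four-neighbour kernel, the zero-valued border, and the function name `laplacian_response` are assumptions chosen for illustration; the text does not state the exact kernel that EDCC uses.

```python
# 3x3 four-neighbour Laplacian kernel (an assumed, commonly used form).
LAPLACIAN = [[0, 1, 0],
             [1, -4, 1],
             [0, 1, 0]]


def laplacian_response(image):
    """Convolve a 2D list of gray values with LAPLACIAN.

    Border pixels are left at 0 to keep the sketch short.
    """
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            acc = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    acc += LAPLACIAN[dy + 1][dx + 1] * image[y + dy][x + dx]
            out[y][x] = acc
    return out


# Toy 5x5 patch: bright skin (200) crossed by a dark vertical line (50).
patch = [[200, 200, 50, 200, 200] for _ in range(5)]
response = laplacian_response(patch)
print(response[2])  # middle row of the response
```

In this toy patch the response is positive on the dark line and negative on the skin next to it, which matches the observation that the enhanced lines end up brighter than their surroundings.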

2D Gabor wavelet

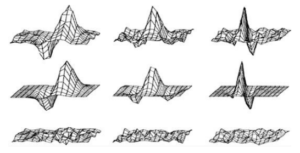

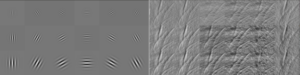

The 2D Gabor wavelet filter closely resembles the response of the human visual system. In the figure below, the first row is the human visual receptive field, the second row is the Gabor wavelet filter, and the third row is the residual between the two. It can be seen that the two are very similar. Moreover, the Gabor wavelet can change direction and scale, so it adapts well to lines in different directions.

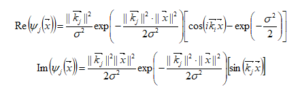

The function form is as follows:

Take 3 scales and 6 directions to form a set of filters, and convolve the palmprint image. The result is shown in the following figure.

It is not difficult to find that the palm lines after 2DGabor wavelet filtering are very clear.
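To make the idea of a directional filter bank concrete, here is a small sketch that builds six oriented kernels at a single scale and checks which one responds most strongly to a line. It uses the textbook real-valued Gabor function (a Gaussian envelope times a cosine wave), which is an assumption and not necessarily the exact wavelet form used by EDCC. The kernel size, `sigma`, `wavelength`, and all function names are likewise illustrative.

```python
import math

N_DIRECTIONS = 6


def gabor_kernel(theta, half=4, sigma=2.0, wavelength=4.0):
    """Real, zero-mean Gabor kernel.

    theta is the direction of the cosine wave, so the kernel responds
    most strongly to bright lines perpendicular to that direction.
    """
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            xr = x * math.cos(theta) + y * math.sin(theta)
            envelope = math.exp(-(x * x + y * y) / (2.0 * sigma ** 2))
            row.append(envelope * math.cos(2.0 * math.pi * xr / wavelength))
        kernel.append(row)
    mean = sum(map(sum, kernel)) / (2 * half + 1) ** 2
    return [[v - mean for v in row] for row in kernel]


def centre_response(patch, kernel):
    """Filter response at the patch centre (patch and kernel share one size)."""
    return sum(p * k
               for prow, krow in zip(patch, kernel)
               for p, k in zip(prow, krow))


def best_direction(patch, bank):
    """Index of the filter with the largest response at the patch centre."""
    responses = [centre_response(patch, k) for k in bank]
    return max(range(len(bank)), key=lambda j: responses[j])


bank = [gabor_kernel(j * math.pi / N_DIRECTIONS) for j in range(N_DIRECTIONS)]

# 9x9 patch with a bright vertical line through the centre column.
vertical_line = [[1.0 if x == 4 else 0.0 for x in range(9)] for _ in range(9)]
print(best_direction(vertical_line, bank))
```

With these parameters the vertical line selects filter index 0, and a horizontal line would select index 3, the filter rotated by 90 degrees.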

Coding

After convolving the image with a set of 2D Gabor wavelet filters of uniform scale but different directions, the response value corresponding to each pixel of the palmprint image can be obtained. It is not difficult to infer that the filter direction corresponding to the maximum response value can approximately represent the direction of the palmprint line at that point, but it is not the exact direction of the line.

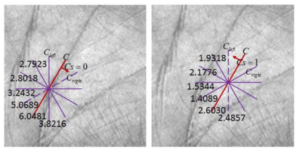

As shown in the figure below, two pixels on the main line of the palmprint image are selected for convolution with a set of 2DGabor wavelet filters with 6 directions, and the corresponding filter response values are calculated. Then the orientation with the maximum filter response is determined as the main direction. It can be seen from the figure that the main directions of (a) and (b) are the same.

In fact, the true main directions of these two pixels are on the left and right of the extracted main direction. This phenomenon can also be found in other parts of the palmprint. Therefore, the main direction extracted based on the maximum filter response cannot accurately represent the true direction of the palmprint.

The method of extracting the main direction of the palmprint based on the filter response is based on the basic assumption that the pixels in the palmprint image belong to a line. When the direction of the filter is the same as the main direction of the palmprint, the filter response will reach its maximum value.

In other words, the filter response is proportional to the degree of overlap between the main line and the filter. However, because of the limited filter directions used in practice, there may be no Gabor filter with the same orientation as the main direction of the palmprint image. As a result, in this case, the extracted direction cannot accurately represent the main directional characteristics of the palmprint.

Generally speaking, the closer the direction of a 2D Gabor wavelet filter is to the main direction of the palmprint, the larger the filter response. Therefore, the directions neighboring the main direction, which usually also have large filter responses, can be extracted and combined with the main direction to represent the palmprint image more accurately.

Method

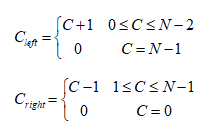

First, a set of 2D Gabor wavelet filters with one scale and N directions are used to convolve the image.

The main direction of a point I (x, y) on the palm line is determined by calculating the filter direction corresponding to the maximum response value of the point. That is:

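$$C = \arg\max_{j} \; (I * G_j)(x, y), \qquad j = 0, 1, \dots, N-1$$

Where: $G_j$ denotes the 2D Gabor wavelet filter in the $j$-th direction, $*$ denotes convolution, and C is the main direction of the palmprint image. Let Cleft and Cright represent the directions adjacent to the main direction.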

By comparing the response values corresponding to the Cleft and Cright directions, the encoding of the secondary direction Cs is obtained.

Since C represents the main directional feature of the palmprint image and Cs represents the secondary directional feature, the combination of C and Cs can more accurately represent the true direction of the lines.

An example of the calculation of (C, Cs) is shown below.
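The following sketch illustrates how such a pair could be computed for one pixel from its N filter responses. The response values are made up, and two details are assumptions rather than facts from the text: the neighbours of the main direction wrap around modulo N, and Cs is set to 1 when the Cleft response is at least as large as the Cright response and to 0 otherwise.

```python
def encode_pixel(responses):
    """Return (C, Cs) for one pixel from its N directional filter responses."""
    n = len(responses)
    c = max(range(n), key=lambda j: responses[j])  # main direction index
    left = responses[(c - 1) % n]    # response in direction Cleft (wraps around)
    right = responses[(c + 1) % n]   # response in direction Cright (wraps around)
    cs = 1 if left >= right else 0   # assumed bit convention for Cs
    return c, cs


# Hypothetical responses of two pixels to a bank of six filters.
pixel_a = [0.10, 0.35, 0.90, 0.60, 0.20, 0.05]
pixel_b = [0.05, 0.70, 0.95, 0.30, 0.15, 0.10]

print(encode_pixel(pixel_a))  # (2, 0)
print(encode_pixel(pixel_b))  # (2, 1)
```

Both pixels share the main direction 2, yet their secondary bits differ, so the pair (C, Cs) separates two lines that the main direction alone would treat as identical.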

Matching method

In the palmprint image matching stage, an angular distance similar to but different from the competitive encoding method is used to determine the similarity between two palmprint images.

The matching score between two palmprint images is defined as:
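The formula itself is not reproduced in this text. As a rough sketch only, the snippet below assumes a score that awards one point when the main directions of a pixel agree, a second point when the secondary directions agree as well, and normalizes by twice the number of pixels. That rule, the name `matching_score`, and the list-of-pairs representation are assumptions for illustration, not a definition confirmed by the source.

```python
def matching_score(code_p, code_q):
    """Similarity in [0, 1] between two equally sized lists of (C, Cs) pairs."""
    if len(code_p) != len(code_q) or not code_p:
        raise ValueError("codes must be non-empty and of equal length")
    hits = 0
    for (c_p, cs_p), (c_q, cs_q) in zip(code_p, code_q):
        if c_p == c_q:
            hits += 1          # main directions agree
            if cs_p == cs_q:
                hits += 1      # secondary directions agree as well
    return hits / (2 * len(code_p))


# Hypothetical codes of two four-pixel images.
code_p = [(2, 0), (3, 1), (0, 1), (5, 0)]
code_q = [(2, 0), (3, 0), (1, 1), (5, 0)]
print(matching_score(code_p, code_q))  # 0.625
```

Under this assumed rule a higher score means more similar images, which is consistent with same-type matches scoring higher than different-type matches in the experiments.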


Experiment

Verification

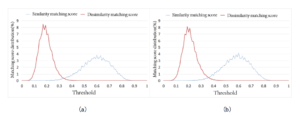

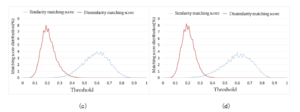

In the palmprint verification step, each palmprint image in the palmprint database is compared with all the remaining images one by one. If the two images come from the same palm, the comparison is called a same-type (genuine) match; otherwise it is called a different-type (impostor) match.

In the multispectral database, each palm has 12 images and each spectrum corresponds to a database of 6,000 images, so there are 6,000 × 5,999 / 2 = 17,997,000 matches per spectrum, of which 33,000 are matches of the same type and 17,964,000 are matches of different types. In the palmprint database of Tongji University, there are 114,000 matches of the same type and 71,880,000 matches of different types.
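These counts follow from simple pair counting, as the short check below shows. The 500 palms are inferred from 6,000 images at 12 images per palm. The split of the Tongji University database into 600 palms with 20 images each is an assumption that reproduces the reported totals, and `match_counts` is an illustrative name.

```python
from math import comb


def match_counts(num_palms, images_per_palm):
    """Return (total, same_type, different_type) pairwise match counts."""
    total_images = num_palms * images_per_palm
    total = comb(total_images, 2)                    # every unordered image pair
    same_type = num_palms * comb(images_per_palm, 2)  # pairs within one palm
    return total, same_type, total - same_type


print(match_counts(500, 12))  # (17997000, 33000, 17964000)
print(match_counts(600, 20))  # (71994000, 114000, 71880000)
```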

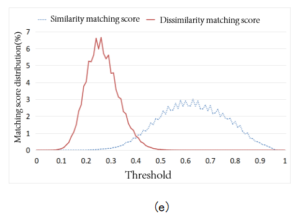

Figure 5.7 shows the distribution of matching scores obtained using the EDCC algorithm on the multispectral database and the Tongji University database. It can be found that the scores of the same type matches are clearly separated from the scores of the different types, and the scores of the same type matches are much higher than the scores of the different types.

(a)-(e) are Red, Green, Blue, NIR, Tongji University database

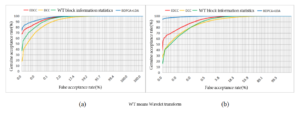

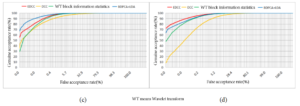

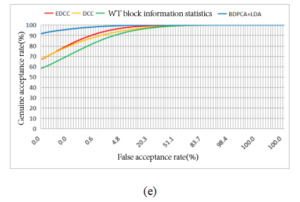

The genuine acceptance rate (GAR) and the false acceptance rate (FAR) are used to evaluate the performance of the algorithm. The ROC curve is obtained by sweeping over all possible thresholds, computing the GAR and FAR at each one, and plotting FAR on the horizontal axis against GAR on the vertical axis.

(a)-(e) are Red, Green, Blue, NIR, Tongji University database

The figure above shows the ROC curves of the EDCC algorithm, DCC algorithm, wavelet transform block statistics algorithm and BDPCA+LDA algorithm verified on different databases. Ignoring the BDPCA+LDA algorithm that produces overfitting, it is not difficult to find that when FAR is the same, the EDCC algorithm has the highest GAR.

The equal error rate (EER) is the error rate at the threshold where FAR equals the false rejection rate (FRR, that is, 1 - GAR). Excluding the BDPCA+LDA algorithm with its overfitting problem, the EERs of the different algorithms are shown in the table below. It can be found that the EDCC algorithm achieves the lowest EER on all databases. Compared with the DCC algorithm, the maximum EER reduction rate of the EDCC algorithm reaches 73% ((5.5105-1.4728)/5.5105), and the average reduction rate is also around 50%.


| Database | EDCC | DCC | Wavelet transform block information statistics |
|---|---|---|---|
| Red | 1.2612 | 4.0145 | 2.2772 |
| Green | 1.8715 | 4.7460 | 3.3249 |
| Blue | 1.7455 | 4.5881 | 3.2456 |
| NIR | 1.4728 | 5.5105 | 2.0192 |
| Tongji University | 3.6116 | 5.3703 | 6.6747 |
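The quantities used in this evaluation can be estimated from two lists of matching scores, as sketched below. The sketch assumes that a pair is accepted when its score is at least the threshold, sweeps only the observed scores as thresholds, and approximates the EER at the threshold where FAR and FRR are closest. The function names and the toy scores are hypothetical.

```python
def far_gar(genuine, impostor, threshold):
    """FAR and GAR when a pair is accepted if its score >= threshold."""
    far = sum(s >= threshold for s in impostor) / len(impostor)
    gar = sum(s >= threshold for s in genuine) / len(genuine)
    return far, gar


def roc_and_eer(genuine, impostor):
    """Sweep each observed score as a threshold.

    Returns the list of (FAR, GAR) points and an EER estimate taken
    where |FAR - FRR| is smallest, with FRR = 1 - GAR.
    """
    roc, best_gap, eer = [], None, None
    for t in sorted(set(genuine) | set(impostor)):
        far, gar = far_gar(genuine, impostor, t)
        frr = 1.0 - gar
        roc.append((far, gar))
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2.0
    return roc, eer


# Toy scores: same-type matches should score higher than different-type ones.
genuine_scores = [0.62, 0.55, 0.48, 0.70, 0.35]
impostor_scores = [0.20, 0.25, 0.30, 0.40, 0.15]
roc, eer = roc_and_eer(genuine_scores, impostor_scores)
print(round(eer, 4))
```

On real data the score lists are much longer, and interpolating between neighbouring thresholds gives a finer EER estimate than picking the closest observed one.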

Identification

Identification refers to determining who an unknown palm print comes from by matching the palm prints in the database one by one.

In the palmprint identification experiment of this paper, N (N = 1, 2, 3, 4) palmprint images of each palm are used as the training set, and the remaining palmprint images are used as the test set. Each image in the test set is compared with the training set one by one to calculate the matching score. The category of the training sample with the highest score is taken as the category of the test image. This procedure gives the identification error rate of each algorithm for different training set sizes.
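The protocol above amounts to nearest-neighbour classification by matching score. The sketch below shows one way to express it. The names `identify` and `error_rate`, the toy templates, and the stand-in `toy_score` function are hypothetical, and any real matching score can be passed in its place.

```python
def identify(probe, gallery, score):
    """Return the label of the gallery template with the highest score."""
    best_label, _ = max(gallery, key=lambda item: score(probe, item[1]))
    return best_label


def error_rate(test_set, gallery, score):
    """Fraction of test samples whose identified label is wrong."""
    errors = sum(identify(t, gallery, score) != label for label, t in test_set)
    return errors / len(test_set)


def toy_score(a, b):
    """Stand-in similarity: fraction of positions with equal codes."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


# Training set (gallery) with one template per palm, plus three test samples.
gallery = [("palm_A", [0, 1, 2, 3, 4, 5]),
           ("palm_B", [5, 4, 3, 2, 1, 0])]
test_set = [("palm_A", [0, 1, 2, 3, 4, 0]),
            ("palm_B", [5, 4, 3, 2, 0, 0]),
            ("palm_A", [5, 4, 3, 0, 0, 0])]  # resembles palm_B, so it is misidentified

print(error_rate(test_set, gallery, toy_score))
```

Adding more training images per palm simply lengthens `gallery`, which is how the error rate can be compared across training set sizes.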

(a)-(e) are Red, Green, Blue, NIR, Tongji University database

The experimental results are shown in the figure above. It can be found that the EDCC algorithm can still achieve a high recognition rate when there are fewer training samples, and under the condition of the same number of training samples, the error rate of the EDCC algorithm is significantly lower than that of other algorithms. In other words, the EDCC algorithm can use fewer training samples to obtain a higher recognition accuracy.

Frequently Asked Questions

What is palmprint recognition, and why is it important?

Palmprint recognition is a biometric technology that uses unique patterns on a person’s palm to verify identity, offering a secure and accurate alternative to traditional methods like passwords or PINs.

How does an efficient palmprint recognition algorithm work?

It processes palm images using advanced techniques such as feature extraction, pattern matching, and machine learning to identify unique traits while minimizing computational requirements.

What makes a palmprint recognition algorithm accurate?

High accuracy comes from robust image preprocessing, noise reduction, and precise feature extraction methods that handle variations like lighting and hand positioning effectively.

What are the key applications of palmprint recognition technology?

It is widely used in security systems, access control, banking, and even healthcare for patient identification and authentication.

What challenges do palmprint recognition algorithms face, and how are they addressed?

Challenges like low-quality images and variations in skin texture are addressed using AI-driven enhancements, adaptive thresholding, and multi-modal biometric systems.

Conclusion

In conclusion, efficient and accurate palmprint recognition algorithms are revolutionizing biometric authentication by combining advanced computational techniques with unparalleled precision, paving the way for secure and reliable identity verification across diverse applications.