Face comparison has become one of the central elements of modern identity verification systems.

Its primary purpose is to let organizations, institutions, and service providers confirm that an individual’s live face corresponds to the portrait photo stored in a government-issued identity document, a database, or another trusted record. Once considered a specialized process, it is now a routine part of many authentication workflows and is more widely applied than ever. With this rise in adoption, it has also become a subject of growing curiosity and discussion among both professionals and the general public.

This leads to a set of important questions: What exactly takes place during a facial comparison procedure? In which industries and scenarios is this technology being deployed? How does it differ from related concepts such as face matching, face detection, and face recognition, which are often mistakenly used interchangeably?

The sections that follow will address these questions in detail and provide clarity on how face comparison works and why it plays such a critical role in modern security and identity management.

What is face comparison?

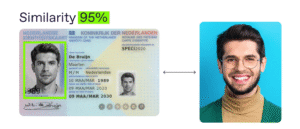

Face comparison can be described as a one-to-one biometric verification procedure designed to determine whether two facial images correspond to the same individual. In essence, the process measures the degree of similarity between a pair of images and produces a decision about whether they represent the same human face.

Within the context of digital identity verification, this typically involves taking a live-captured selfie of the user and comparing it against the portrait photograph that has been extracted from a trusted source, such as a government-issued identity document, a secure account profile, or an institutional record.

The underlying engine responsible for this task does more than a simple visual check. Both facial images are first converted into mathematical representations known as templates, which encode the distinctive features and geometry of the face in a standardized format.

Once these templates are generated, the system calculates a similarity score that quantifies how closely the two sets of facial features align with one another. Finally, this score is evaluated against a predefined threshold value, and based on that comparison, the system issues a binary outcome—commonly expressed as either “yes,” indicating a confirmed match, or “no,” indicating that the faces do not belong to the same person.
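The score-and-threshold step described above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: it assumes templates are plain lists of floats and that cosine similarity is the metric (the article does not name one). The function names and the 0.6 threshold are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length templates (lists of floats)."""
    if len(a) != len(b):
        raise ValueError("templates must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("templates must be non-zero")
    return dot / (norm_a * norm_b)


def is_same_person(template_a, template_b, threshold=0.6):
    """Return (decision, score). The default threshold is a placeholder,
    not a recommended value; real systems calibrate it on labelled data."""
    score = cosine_similarity(template_a, template_b)
    return score >= threshold, score
```

In a real pipeline the two templates would come from a face recognition model applied to the selfie and the document portrait; here they are simply passed in as numbers.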

Key differences between related terms

The identity verification industry is rapidly evolving, and with that evolution comes an increasing number of specialized methods for using a person’s face as a central component in authentication processes. What was once a relatively straightforward application—simply checking whether two photos belong to the same individual—has now expanded into a diverse set of approaches and technologies, each tailored to different use cases, levels of security, and user experiences.

As a result of this growing sophistication, the industry has also generated a wide range of terminology to describe these processes. While this vocabulary is meant to provide clarity, in practice it often has the opposite effect, creating overlaps, ambiguities, and misunderstandings. Even professionals and practitioners who work with biometric systems on a daily basis can sometimes find themselves puzzled by the distinctions—or lack thereof—between certain terms.

To make sense of this complexity, it is worth taking a step back and addressing some of the most common areas of confusion. In the following sections, we will unpack these gray areas and explain them in straightforward terms, helping to separate concepts that are frequently conflated and ensuring that their differences are clearly understood.

Face comparison vs face detection

Face detection serves as the starting point in most face-related biometric systems. Its role is purely to act as a locator: it scans an image or video frame, identifies the presence of a human face, and returns a bounding box around it along with key facial landmarks such as the eyes, nose, and mouth. Importantly, face detection does not attempt to determine who the person is or whether they match anyone else—it is only about finding and marking the face in a given scene.

Facial comparison, in contrast, goes beyond locating. It is a decision-making process that evaluates whether two detected faces belong to the same individual. The system converts each face into a mathematical template, compares the similarity between those templates, and then produces a score.

This score is assessed against a threshold, and the outcome is typically expressed as either a “yes,” confirming that the two faces match, or a “no,” indicating that they do not. Within the context of identity verification, this comparison most often involves checking a live selfie submitted by a user against the portrait image extracted from their government-issued ID document.

To highlight the distinction more clearly, here is a side-by-side comparison:

| Aspect | Face Detection | Face Comparison |
|---|---|---|
| Purpose | Finds and locates a face in an image or video | Determines whether two faces belong to the same individual |
| Output | Bounding box + landmarks (eyes, nose, mouth, etc.) | Binary decision (“yes” = match, “no” = not a match) plus similarity score |
| Identity Check | None – does not establish or verify identity | Yes – explicitly checks if two faces represent the same person |
| Process | Scans input, detects face regions | Converts faces into templates, compares similarity, applies threshold |
| Typical Use Case | Preprocessing step for face recognition or analysis | Identity verification (e.g., selfie vs. ID photo comparison) |

Face comparison vs face matching

The term face matching is somewhat complex and often used in multiple ways depending on the context, which can make it a source of confusion. In many cases, people use face matching as a synonym for face comparison, referring to a straightforward one-to-one (1:1) verification process where two images are evaluated to determine if they belong to the same individual.

However, in other implementations, the phrase carries a more nuanced meaning. It may describe an intermediate step in which a probe image—often a live selfie or a captured frame—is evaluated against several stored portraits or frames. The purpose of this stage is not to make a final decision, but rather to identify the most suitable candidate image that will later be used in a dedicated facial comparison step.

By contrast, the term face comparison is typically reserved for the final verification stage. Here, only a single pair of images is considered: the probe image and the selected reference image. The system then generates templates for both, measures their similarity, and delivers a definitive binary outcome—either a “yes,” confirming that they represent the same person, or a “no,” indicating a mismatch.

In this way, a single identity verification product can incorporate both concepts as separate but connected components within the same workflow. Face matching functions as a pre-selection mechanism, narrowing down multiple possible reference images to the most relevant candidate. Face comparison then operates on that selected pair to render the conclusive verification decision.

Together, these processes ensure both efficiency and accuracy in confirming a user’s identity.
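To make the two-stage workflow concrete, here is a hedged sketch in which face matching picks the best reference and face comparison then decides on that single pair. It assumes templates are unit-length lists of floats, so a dot product serves as the similarity. The names `select_reference` and `verify` and the 0.6 threshold are illustrative only.

```python
def dot(a, b):
    """Similarity of two unit-length templates (dot product equals cosine)."""
    return sum(x * y for x, y in zip(a, b))


def select_reference(probe, references, similarity=dot):
    """Face matching as pre-selection: pick the stored reference
    that is most similar to the probe. No accept/reject decision here."""
    if not references:
        raise ValueError("at least one reference template is required")
    return max(references, key=lambda ref: similarity(probe, ref))


def verify(probe, references, threshold=0.6, similarity=dot):
    """Face comparison on the selected pair: returns (decision, score).
    The threshold is a hypothetical placeholder."""
    best = select_reference(probe, references, similarity)
    score = similarity(probe, best)
    return score >= threshold, score
```

Keeping the two functions separate mirrors the split described above: the selection step can change (for example, to prefer the sharpest frame) without touching the final decision logic.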

Face comparison vs face recognition

Face comparison is a strictly one-to-one (1:1) biometric verification process. In a typical identity verification scenario, the individual provides a live selfie, and the system extracts the portrait photo from their official identity document. The face comparison engine then analyzes these two images and determines whether they belong to the same person.

This approach involves no gallery of stored identities and no ranking of candidates. Consent is also easier to establish, since the user participates directly by presenting their document and selfie. The result is a clear and straightforward “match” or “no match” decision.

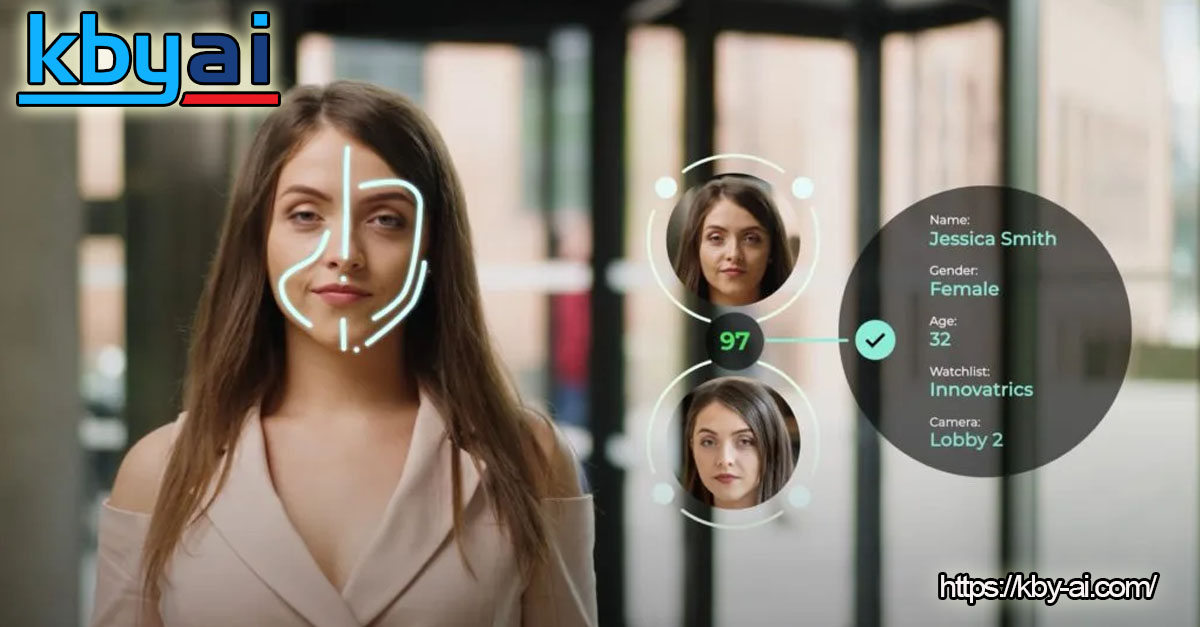

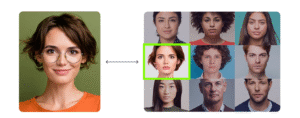

Facial recognition, by contrast, operates on a one-to-many (1:N) model. Instead of comparing a probe image against just one reference portrait, it evaluates the probe against an entire gallery of stored faces, such as a watchlist, employee database, or national registry. The system then produces a ranked list of candidates, indicating the most likely matches.

Because of this, facial recognition requires additional infrastructure beyond what face comparison needs: gallery management, indexing of large datasets, and specialized recognition algorithms optimized for large-scale search rather than simple verification.
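For contrast with 1:1 comparison, the following sketch shows the shape of a 1:N search: one probe scored against every gallery entry, returning a ranked candidate list rather than a yes/no answer. It is a naive linear scan over a small in-memory dictionary, assuming unit-length templates. Production systems would rely on the indexing infrastructure mentioned above, which is not shown here. All names are hypothetical.

```python
def rank_gallery(probe, gallery, top_k=3):
    """Return the top_k (identity, score) pairs, best match first.

    gallery maps an identity label to its unit-length template.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    scored = [(identity, dot(probe, template))
              for identity, template in gallery.items()]
    # Highest similarity first; the caller decides what to do with the list.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]
```

Note that the output is a list of candidates with scores, not a decision: deciding whether the top candidate is actually the same person is a separate step.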

Some identity verification (IDV) vendors offer support for both 1:1 comparison and 1:N recognition, and when they do, they often emphasize the importance of distinguishing between the two functionalities. For example, KBY-AI deliberately uses the term face matching to describe its 1:1 verification feature, while reserving face recognition to refer exclusively to its 1:N search capabilities.

This clarity helps customers understand whether the system is confirming an identity through direct comparison, or attempting to establish one from a broader set of possible matches.

How face comparison works

A standard face comparison process can generally be divided into several well-defined stages, each contributing to the overall reliability and security of the system.

Step 1. Capture and quality control:

At the very beginning, the system provides on-screen guidance that assists the user in properly positioning themselves — helping them hold the camera steady, maintain eye contact with the lens, and adopt a neutral facial expression for consistency. During this stage, automated checks evaluate image quality parameters such as brightness, focus sharpness, head pose, and proportional head size within the frame. On the document side, the software identifies the portrait area, isolates it accurately, and performs cropping to ensure that both human and document images meet the same technical standards before proceeding.
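As an illustration of what automated quality checks might look like, the sketch below tests brightness and focus on a grayscale image given as a list of rows of 0-255 integers. Using the variance of a Laplacian filter as a blur measure is a common heuristic, chosen here as an assumption; the article does not say which measures a given product uses. All limits are placeholder values, and pose and head-size checks are omitted.

```python
def mean_brightness(gray):
    """Average pixel value of a grayscale image (list of rows of 0-255 ints)."""
    pixels = [p for row in gray for p in row]
    return sum(pixels) / len(pixels)


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over interior pixels.
    Low values suggest a blurry (low-detail) image."""
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            responses.append(
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
    if not responses:
        return 0.0
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def quality_issues(gray, min_brightness=60, max_brightness=200,
                   min_sharpness=50.0):
    """Return a list of issue labels; an empty list means the checks passed.
    All limits are hypothetical and would need tuning per camera and use case."""
    issues = []
    brightness = mean_brightness(gray)
    if brightness < min_brightness:
        issues.append("too_dark")
    elif brightness > max_brightness:
        issues.append("too_bright")
    if laplacian_variance(gray) < min_sharpness:
        issues.append("blurry")
    return issues
```

Returning labels rather than a single pass/fail flag lets the on-screen guidance tell the user what to fix.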

Step 2. Face detection:

Next, a robust face detection algorithm locates the person’s face and identifies landmark points (eyes, nose, mouth, etc.) to achieve consistent and reliable cropping. This step is critical because even the most sophisticated face comparison models can perform poorly if essential facial regions are cut off or misaligned. Precise detection ensures that subsequent template creation and comparison are based on correctly framed facial data.

Step 3. Liveness detection:

At this stage, the system must determine whether it is interacting with a real, live individual instead of a spoof attempt such as a photograph, video replay, digital injection, or physical mask. Liveness detection can include both active and passive techniques. Active liveness detection typically requests the user to perform certain movements—like turning their head, blinking, or smiling—while passive approaches unobtrusively analyze the captured video stream for signs of natural texture, micro-expressions, and other subtle cues of real human presence. Advanced SDKs such as KBY-AI’s Face SDK support either or both modes, depending on deployment requirements and available hardware.

Step 4. Template creation and comparison:

After confirming liveness, the next operation is feature extraction, where the system generates a compact numerical template (embedding) from each face crop using a deep learning–based recognition algorithm. These templates are then compared mathematically, with the similarity score and relevant metadata recorded for audit or monitoring purposes. The resulting match score quantifies how likely it is that both faces belong to the same individual.

Step 5. Outcome handling:

Based on the computed score, the system determines the next action. If the similarity falls below a predefined threshold due to poor image quality—such as excessive blur, reflections, or non-frontal pose—the user can be prompted to recapture the image. If Presentation Attack Detection (PAD) or injection defense mechanisms identify a potential spoof, the verification session is immediately blocked. For cases that fall near the decision boundary, organizations often employ manual review or secondary verification, depending on their risk appetite, compliance policies, and jurisdictional regulations.
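The branching described in this step can be written as a small decision function. The sketch below is one possible ordering of the rules, under assumed inputs: a similarity score, a flag from PAD or injection defense, and a flag from the quality checks. The threshold, the width of the manual review band, and the outcome names are all hypothetical, and real policies depend on the organization's risk appetite.

```python
from enum import Enum


class Outcome(Enum):
    ACCEPT = "accept"
    RECAPTURE = "recapture"
    MANUAL_REVIEW = "manual_review"
    REJECT = "reject"
    BLOCK = "block"


def decide(score, spoof_detected, quality_ok,
           threshold=0.6, review_margin=0.05):
    """Map a comparison result to a next action (illustrative policy only)."""
    # A suspected presentation or injection attack ends the session.
    if spoof_detected:
        return Outcome.BLOCK
    # A low score on a poor-quality image is more likely a capture problem
    # than a true mismatch, so ask the user to try again.
    if score < threshold and not quality_ok:
        return Outcome.RECAPTURE
    # Scores close to the decision boundary go to a human or a second check.
    if abs(score - threshold) <= review_margin:
        return Outcome.MANUAL_REVIEW
    return Outcome.ACCEPT if score >= threshold else Outcome.REJECT
```

For example, `decide(0.62, spoof_detected=False, quality_ok=True)` falls inside the review band and returns `Outcome.MANUAL_REVIEW` rather than an automatic accept.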

Step 6. Calibration, security, and privacy management:

Behind the scenes, ongoing calibration ensures that the system maintains an optimal balance between false accepts and false rejects across different real-world scenarios. Security and privacy remain paramount: biometric templates are encrypted at rest, stored with strict key rotation practices, and retained only for as long as necessary to fulfill a defined business or regulatory purpose. Continuous performance monitoring and data governance help ensure that the entire facial comparison pipeline remains accurate, fair, and compliant.
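Calibration of the balance between false accepts and false rejects is usually done on labelled score data. As a rough sketch, assume two lists of similarity scores: one from genuine pairs (same person) and one from impostor pairs (different people). The functions below compute the false accept rate (FAR) and false reject rate (FRR) at a threshold, then pick the lowest candidate threshold that keeps FAR under a cap. The function names and the selection rule are illustrative assumptions.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (far, frr) at the given threshold.

    far: share of impostor pairs wrongly accepted (score >= threshold).
    frr: share of genuine pairs wrongly rejected (score < threshold).
    """
    if not genuine_scores or not impostor_scores:
        raise ValueError("both score lists must be non-empty")
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    return (false_accepts / len(impostor_scores),
            false_rejects / len(genuine_scores))


def pick_threshold(genuine_scores, impostor_scores, candidates, max_far):
    """Lowest candidate threshold whose FAR does not exceed max_far.

    Because FRR can only grow as the threshold rises, the lowest
    qualifying threshold also gives the lowest FRR under that cap.
    Returns (threshold, far, frr), or None if no candidate qualifies.
    """
    for threshold in sorted(candidates):
        far, frr = error_rates(genuine_scores, impostor_scores, threshold)
        if far <= max_far:
            return threshold, far, frr
    return None
```

Re-running this selection on fresh data from each deployment scenario is one simple way to keep the trade-off under review over time.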

Face comparison use cases

Face comparison technology has progressed from a niche innovation to a widely adopted mainstream solution, integrated into a broad spectrum of industries and everyday processes. Its versatility and reliability have made it indispensable for verifying identity, preventing fraud, and keeping the user experience smooth across multiple sectors, including the following:

Banking, fintech, and cryptocurrency:

Traditional banks, digital-first neobanks, and cryptocurrency exchanges increasingly depend on face comparison technology during customer onboarding to securely verify that a selfie matches the portrait embedded in an identity document once document validation has been completed. Beyond onboarding, the same verification systems are leveraged during critical account activities such as device changes, large payouts, or sessions that appear unusual or high-risk. To balance convenience and security, financial institutions often adopt adaptive thresholds—using stricter matching criteria for the first payout or new device login, and slightly more lenient parameters for returning customers who consistently use trusted devices.
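The adaptive-threshold idea can be sketched as a function that shifts a base threshold according to session risk signals. Everything here is an assumption for illustration: the signal names, the base value of 0.6, and the size of each adjustment are not taken from any real institution's policy.

```python
def adaptive_threshold(base=0.6, new_device=False, first_payout=False,
                       trusted_returning=False):
    """Return a match threshold adjusted for session risk (illustrative values).

    Riskier events raise the bar; a returning customer on a trusted
    device gets a slightly lower one, but only when no risk signal is set.
    """
    threshold = base
    if new_device:
        threshold += 0.10
    if first_payout:
        threshold += 0.10
    if trusted_returning and not (new_device or first_payout):
        threshold -= 0.05
    # Keep the result inside the valid score range and tidy float noise.
    return round(min(max(threshold, 0.0), 1.0), 2)
```

The returned value would then be passed to whatever comparison step the system uses, so the matching logic itself stays unchanged across risk levels.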

Travel, border control, and aviation:

At airports and border crossings, self-service eGates and airline check-in kiosks routinely conduct one-to-one (1:1) face comparisons between a live-captured image and the portrait stored in the traveler’s ePassport chip. Because these environments typically feature controlled lighting, fixed camera angles, and standardized workflows, the resulting comparisons achieve high accuracy even during brief user interactions, helping reduce queues while maintaining stringent border security standards.

Government services and digital identity programs:

Public-sector platforms and national digital ID systems increasingly utilize face comparison algorithms to securely link citizens’ online accounts with verified identity records. This ensures that users accessing government services—such as tax filing, benefit applications, or address updates—are the rightful owners of the associated identity. The technology provides a fast, user-friendly, and secure layer of authentication that strengthens trust between citizens and digital public infrastructure.

Online gambling and gaming platforms:

In industries where user authenticity and age verification are mandatory, face comparison plays a critical role. During registration, funding, and withdrawal processes, the technology confirms that the individual performing the transaction is the same person who initially created and verified the account. This mitigates account takeovers and underage gambling risks while streamlining compliance with KYC and AML regulations.

E-commerce and digital marketplaces:

Major e-commerce platforms and gig economy marketplaces employ biometric face comparison during seller onboarding, high-value payment disbursements, and account recovery procedures. This additional verification step helps prevent impersonation and fraud, ensuring that payouts are made only to legitimate sellers and service providers. It also contributes to user confidence by maintaining a secure and transparent marketplace ecosystem.

Education, remote proctoring, and online examinations:

As online learning and remote testing expand globally, educational institutions and testing providers have begun incorporating face comparison technology into student onboarding and exam check-in procedures. By matching a live selfie with a pre-registered ID photo, systems can confirm that the correct candidate is participating, effectively reducing impersonation and exam fraud while maintaining a smooth, automated user experience.

Telecommunications and eSIM provisioning:

Telecom operators increasingly depend on face comparison when registering prepaid SIM cards, transferring phone numbers, or activating eSIMs. By verifying that a live selfie corresponds to the individual in the submitted ID document, providers can comply with national identity regulations and reduce SIM-related fraud without burdening legitimate users.

Workplace access, facilities, and visitor management:

In secure environments, face comparison acts as a reliable gatekeeper for physical access control systems. Turnstiles, kiosks, and visitor check-in terminals use live facial captures to verify individuals against pre-consented reference templates stored within an access management database. When facilities also utilize ID badges or QR credentials, facial comparison adds a second layer of identity assurance—especially for high-security areas, server rooms, or temporary visitor access points—thereby combining convenience with enhanced protection.

Frequently Asked Questions (FAQ)

1. What is face comparison?

Face comparison is a technology that analyzes and compares two facial images to determine if they belong to the same person.

2. How accurate is face comparison?

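Accuracy varies by technology and conditions, and it is usually reported as a pair of error rates (false accepts and false rejects) at a chosen threshold rather than as a single figure. Modern systems can achieve very high accuracy, often over 95% in controlled environments.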

3. Where is face comparison used?

It’s commonly used in banking, travel, security checks, device authentication, and identity verification for online services.

4. Is face comparison safe and secure?

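Reputable systems use encryption and comply with privacy regulations to protect user data. In practice, security also depends on how a given deployment handles liveness detection, template storage, and data retention.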

5. Can face comparison work with photos from different angles or lighting?

Advanced systems can handle some variation in angle and lighting, but extreme differences may reduce accuracy.

Conclusion

Face comparison represents a straightforward yet highly precise decision-making process that fundamentally relies on analyzing two inputs: a live-captured image and a reference image from an official document or pre-enrolled template. This technology forms the cornerstone of identity verification workflows across a wide variety of sectors, including finance, telecommunications, travel, government and public services, as well as workplace and facility access systems.

When the captured images are of high quality, liveness detection is robust, and the matching algorithms are properly calibrated, biometric facial comparison delivers a powerful layer of security. At the same time, it does so without introducing unnecessary friction for legitimate users, striking an effective balance between strong protection against fraud and a smooth, user-friendly experience.